The primary purpose of AI Field Trials (hereafter referred to as Trials) is to assess different workflows and tools when applied to specific use cases. This approach establishes a baseline for performance and enables comparison of various solutions.

A secondary purpose is to track rankings for each AI tool across all applied use cases and workflows. While individual trials may yield favorable results for a given tool, general tool rankings provide a more comprehensive performance overview. These rankings become more statistically reliable as a tool undergoes more trials against other tools. As a result, the rankings identify the top-performing tools across all applications or within specific categories.

As an independent publisher, Word of Lore maintains no affiliation with any of the AI tools mentioned in its stories and workflows. This independence allows the organization to strive for an unbiased view of state-of-the-art AI technologies.

Trials are conducted on a schedule and pit workflows that fall under the same or similar use cases against each other head-to-head. While each Trial aims to cover a broad and diverse set of the workflows available at the time of writing, the Trials are not intended to be exhaustive.

Terminology

AI Field Trial: A tournament-style evaluation of AI solution performance, where multiple solutions are compared in a structured format.

AI Tool: Any model or tool that leverages artificial intelligence (AI) or machine learning (ML). This may also include technologies that use statistical methods to solve problems.

Workflow: A step-by-step orchestration of AI Tools designed to provide a solution to a specific use case or problem.

Use Case or AI Problem: A real-world scenario that requires a solution, automation, or augmentation through AI technologies.

Solution: A concrete workflow for addressing a particular use case or problem using one or more AI tools.

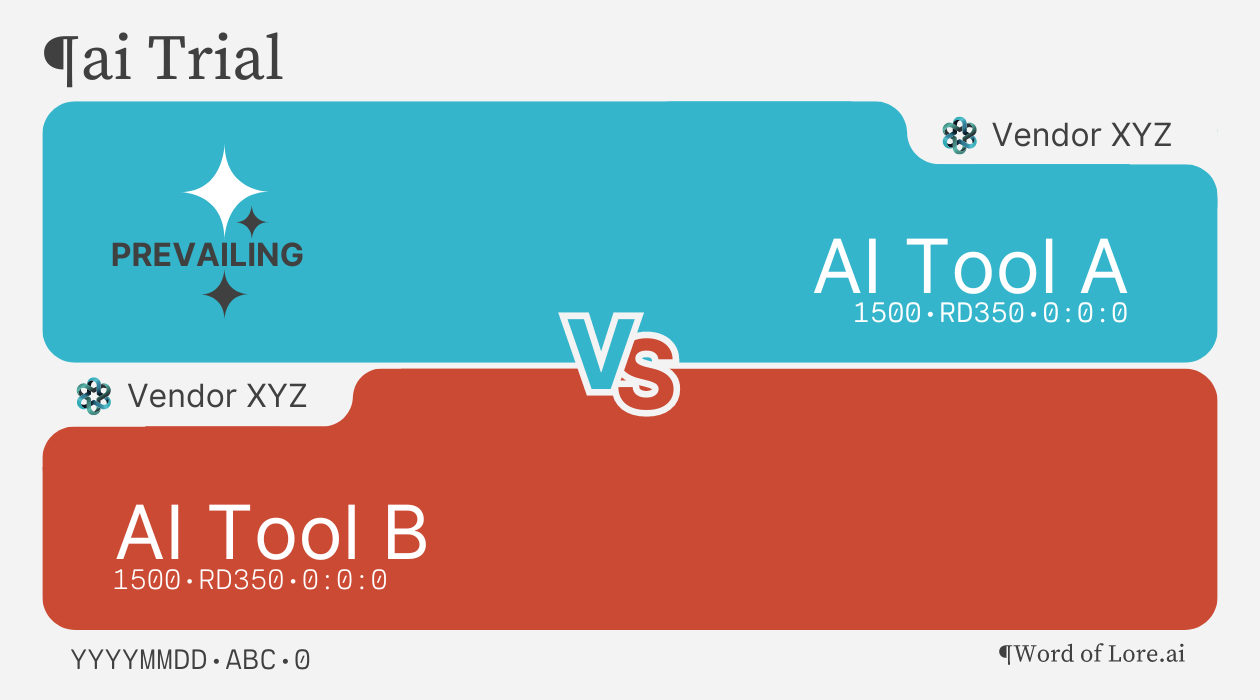

Face-Off: A single comparison between two AI workflows in a particular trial.

Round: A stage of a trial where contestants are paired together, with each contestant participating in only one face-off per round.

Rating Period: A period of time during which ratings are locked. Ratings are updated only on the date the period matures.

Face-Offs and Rounds

The Trials employ a one-versus-one assessment format. Each evaluation compares one solution against another. A participant may be a single AI tool or a set of tools. There is no limit to the number of tools that can form a single solution.

The Trials use a Swiss pairing system to ensure equal opportunity, reduce bias, and accurately assess contestants' performance. In this format, no contestant is eliminated, and each round pairs contestants with similar standings. Ratings are updated at predefined intervals (see Rating Period), so adjustments are applied consistently throughout the trial.
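The pairing step of a Swiss system can be sketched as follows. This is a minimal, greedy illustration and not Word of Lore's actual pairing procedure: the `Contestant` class, the `swiss_round` function, and the choice to sort by rating are all assumptions made for the example. It pairs each contestant with the closest-ranked opponent it has not yet faced, and it guarantees that each contestant appears in at most one face-off per round.

```python
from dataclasses import dataclass, field


@dataclass
class Contestant:
    """Hypothetical record for one solution entered in a trial."""
    name: str
    rating: float = 1500.0
    opponents: set = field(default_factory=set)  # names already faced


def swiss_round(contestants):
    """Pair contestants for one round.

    Returns (pairs, bye), where bye is the unpaired contestant
    when the field has an odd size, otherwise None.
    """
    # Sort strongest first so that neighbours have similar ratings.
    pool = sorted(contestants, key=lambda c: c.rating, reverse=True)
    pairs = []
    bye = None
    while pool:
        first = pool.pop(0)
        if not pool:
            bye = first  # odd field: the last contestant sits out
            break
        # Closest-ranked contestant that `first` has not met yet.
        idx = next(
            (i for i, c in enumerate(pool) if c.name not in first.opponents),
            0,  # everyone remaining was already faced: allow a rematch
        )
        second = pool.pop(idx)
        first.opponents.add(second.name)
        second.opponents.add(first.name)
        pairs.append((first, second))
    return pairs, bye
```

With five contestants rated 1600, 1550, 1500, 1450, and 1400, the first call pairs the top two and the next two and gives the lowest-rated contestant a bye. A second call with unchanged ratings avoids the repeat pairings. A production pairing engine would typically add backtracking and rotate byes, which this sketch omits.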

The Trial process is designed to offer dynamic statistical measurements of the effectiveness of AI tools (solutions) applied to real-world use cases (problems). The method for computing ratings is described on the AI Ratings page.

Face-Off Outcomes

There are three possible outcomes for each face-off:

- Prevailing AI: This is declared when there's a clear winner. Ratings are adjusted according to the procedure outlined on the AI Ratings page.

- Co-Proficient AIs (a positive draw): This is declared when both contestants perform equally or near-equally well, with both satisfying the trial use case.

- Mutually Suboptimal AIs (a negative draw): This is declared when both contestants perform equally or near-equally poorly, with both falling short of satisfying the trial use case. While this outcome is typically rare due to preliminary selection and qualification for trials, it remains a possible result.

For either type of draw, the overall rating isn't adjusted, but the rating deviation may be modified.
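One way to record these outcomes is sketched below. The `Outcome` and `FaceOffResult` names and their fields are hypothetical and chosen only for illustration. The sketch encodes two rules from this section: only a Prevailing AI result names a winner, and only that result adjusts the overall rating.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    PREVAILING_AI = "prevailing"            # clear winner
    CO_PROFICIENT = "positive_draw"         # both satisfy the use case
    MUTUALLY_SUBOPTIMAL = "negative_draw"   # both fall short


@dataclass(frozen=True)
class FaceOffResult:
    """Hypothetical record of a single face-off."""
    first: str
    second: str
    outcome: Outcome
    winner: Optional[str] = None

    def __post_init__(self):
        if self.outcome is Outcome.PREVAILING_AI:
            if self.winner not in (self.first, self.second):
                raise ValueError("a prevailing result must name one contestant")
        elif self.winner is not None:
            raise ValueError("a draw cannot name a winner")

    @property
    def adjusts_rating(self) -> bool:
        # Only a clear win moves the overall rating; either kind of
        # draw may still modify the rating deviation.
        return self.outcome is Outcome.PREVAILING_AI
```

Keeping the two draw types distinct, rather than collapsing them into a single "draw" value, preserves whether the use case was actually satisfied even though neither draw changes the overall rating.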

Trial Cards

After each face-off, Word of Lore produces a Trial Card and publishes it along with the results.

For details about the information on the trial card, refer to the Trial Cards page.

Common Questions

What rating is considered good, and what rating indicates suboptimal performance?

There isn't a specific rating number that definitively indicates good or suboptimal performance. The rating system is not designed to measure absolute performance. Instead, it measures relative performance among the AI tools and solutions being evaluated.

How often are Trials conducted?

Trials are conducted on an ongoing basis, with results released continuously as they become available. Word of Lore updates the relevant Trial Nomination page to show how AI Tools are paired and the sequence of result releases. However, exact release dates for specific trial results are not provided.

Can anyone submit an AI tool or workflow for evaluation in the Trials?

Yes, members of the Word of Lore community can submit their nominations and workflows for evaluation in the Trials. Submissions are made through the comments section of the appropriate Trial Nomination page.

How are use cases for Trials selected?

Use cases for Trials are carefully curated by the Word of Lore editors. Each selected use case must satisfy a real-world utility test. Only workflows and use cases that demonstrate genuine practical usefulness are chosen for the Trials.

By focusing on practical applications, the Trials provide valuable insights into the performance of AI tools and workflows in contexts that matter to users and developers.

Is there a minimum number of face-offs or rounds an AI tool must participate in before receiving a meaningful rating?

There isn't a strict minimum number of face-offs or rounds required for an AI tool to receive a meaningful rating. Instead, Word of Lore uses a dynamic rating system that evolves with participation:

- New tools are initially assigned a provisional rating of 1500 with a deviation of 350.

- As a tool participates in more trials, its rating deviation gradually decreases.

- The decrease in deviation results in the rating becoming more representative of the tool's actual performance over time.

This approach allows new tools to enter the evaluation process quickly while ensuring that their ratings become increasingly accurate and meaningful as they participate in more face-offs. The system balances the need for immediate feedback with the requirement for statistical reliability in the ratings.

It's worth noting that while a tool may receive a rating after its first face-off, users should consider the associated deviation when interpreting the rating's significance. Ratings with lower deviations generally provide a more reliable indication of a tool's performance relative to others in the Trials.
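To make the shrinking deviation concrete, the sketch below uses the deviation update from the Glicko-1 rating system. This is an assumption: the starting values of 1500 and 350 match Glicko's defaults, but the method Word of Lore actually uses is the one described on the AI Ratings page. The function names are hypothetical, and the interval helper assumes a normal approximation.

```python
import math

Q = math.log(10) / 400  # Glicko-1 scaling constant


def g(rd):
    """Dampening factor for an opponent's rating deviation."""
    return 1 / math.sqrt(1 + 3 * Q**2 * rd**2 / math.pi**2)


def expected_score(r, r_j, rd_j):
    """Expected score of rating r against opponent (r_j, rd_j)."""
    return 1 / (1 + 10 ** (-g(rd_j) * (r - r_j) / 400))


def updated_deviation(r, rd, opponents):
    """Deviation after one rating period.

    opponents: list of (rating, deviation) pairs faced in the period.
    """
    inv_d2 = Q**2 * sum(
        g(rd_j) ** 2
        * expected_score(r, r_j, rd_j)
        * (1 - expected_score(r, r_j, rd_j))
        for r_j, rd_j in opponents
    )
    return math.sqrt(1 / (1 / rd**2 + inv_d2))


def interval_95(r, rd):
    """Approximate 95% interval for the underlying strength."""
    return (r - 1.96 * rd, r + 1.96 * rd)


# A new tool faces one equally new opponent in each of five periods.
rd = 350.0
for period in range(1, 6):
    rd = updated_deviation(1500.0, rd, [(1500.0, 350.0)])
    low, high = interval_95(1500.0, rd)
    print(f"period {period}: deviation {rd:.0f}, interval {low:.0f} to {high:.0f}")
```

Under these assumptions, the deviation falls with every period in which the tool competes, and the interval around the rating narrows accordingly. A rating of 1500 with a deviation of 350 spans roughly 814 to 2186, which is why an early rating says little on its own.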

If you have any questions, feel free to reach out to humans@wordoflore.ai.